My perspective on the law, politics, drawing, writing, and other things. Formerly Southern Lawyer NC.

Posts

Federal Judges and Congress Don't Care About Your Privacy

Why are big banks so bad at data privacy?

Why Should You Care About Security and Data Privacy?

Discussion with ChatGPT Regarding AI in Legal Research and Writing

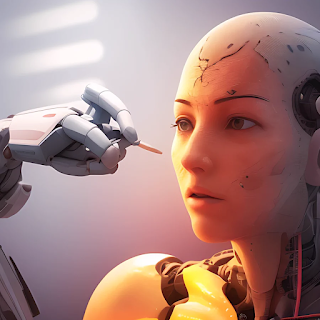

The Rise of AI and the Threat to Humanity
